In this post I am going to show how to enrich an ADF application with the ability to take pictures with a web camera. To get access to the camera's stream and to show the pictures taken, we will use the HTML5 video and canvas tags. My HTML code looks like this:


<table border="1" width="900" align="center">
  <tr align="center">
    <td>
      Web Camera
      <div id="camera" style="height:300px; width:400px; border-style:solid;">
        <video id="video" width="400" height="300"></video>
      </div>
    </td>
    <td>
      Photo Frame
      <div id="frame" style="height:300px; width:400px; border-style:solid;">
        <canvas id="canvas" width="400" height="300"></canvas>
      </div>
    </td>
  </tr>
  <tr align="center">
    <td colspan="2">
      <button id="snap" onclick="snap()">Snap Photo</button>
    </td>
  </tr>
</table>

And it looks like this:

On the left side we show the video stream captured from the web camera, and on the right side we show the pictures taken. To get it all working we need some JavaScript:

<script type="text/javascript">
  var video = document.getElementById("video"),
      videoObj = { "video": true },
      canvas = document.getElementById("canvas"),
      context = canvas.getContext("2d"),
      frame = document.getElementById("frame"),
      errorBack = function (error) {
        alert(error);
      };

  // This function is invoked once the page has been parsed
  function setupCamera() {
    if (navigator.getUserMedia) {
      navigator.getUserMedia(videoObj, standardVideo, errorBack);
    } else if (navigator.webkitGetUserMedia) {
      navigator.webkitGetUserMedia(videoObj, webkitVideo, errorBack);
    } else if (navigator.mozGetUserMedia) {
      navigator.mozGetUserMedia(videoObj, mozillaVideo, errorBack);
    }
  }

  // Different success callbacks for different browsers
  var standardVideo = function (stream) {
    video.src = stream;
    video.play();
  };

  var webkitVideo = function (stream) {
    video.src = window.webkitURL.createObjectURL(stream);
    video.play();
  };

  var mozillaVideo = function (stream) {
    video.src = window.URL.createObjectURL(stream);
    video.play();
  };

  // onClick handler for the snap button
  function snap() {
    // Take a picture from the video stream
    context.drawImage(video, 0, 0, 400, 300);
    // Add a CSS3 class style to get a "sliding" effect
    canvas.className = "animated";
    // Add a listener to remove the "animated" class style
    // at the end of the animation
    canvas.addEventListener("animationend", animationEnd, false);
  }

  function animationEnd(e) {
    // Finish the animation
    canvas.className = "normal";
    // Set the taken picture as the div's background
    frame.style.backgroundImage = 'url(' + canvas.toDataURL("image/png") + ')';
  }

  // On load: pass the function itself; writing setupCamera() here
  // would call it immediately and register its return value instead
  window.addEventListener("DOMContentLoaded", setupCamera, false);
</script>
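The prefixed `getUserMedia` variants above reflect the browsers available when this was written. Current browsers expose a promise-based `navigator.mediaDevices.getUserMedia` and expect the stream to be assigned to `video.srcObject` rather than to `video.src`. Here is a minimal sketch of how the detection could be wrapped so that the rest of the page deals with a single promise-based function. The helper names `findGetUserMedia` and `attachStream` are hypothetical and are not part of the sample application.

```javascript
// Hypothetical helper: returns a function (constraints) => Promise,
// or null when the given navigator object offers no camera API at all.
function findGetUserMedia(nav) {
  // Modern, promise-based API
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === "function") {
    return function (constraints) {
      return nav.mediaDevices.getUserMedia(constraints);
    };
  }
  // Legacy callback-based variants, wrapped in a Promise
  var legacy = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  if (typeof legacy === "function") {
    return function (constraints) {
      return new Promise(function (resolve, reject) {
        legacy.call(nav, constraints, resolve, reject);
      });
    };
  }
  return null;
}

// Hypothetical helper: hands a stream to a <video> element and returns the element.
function attachStream(videoElement, stream) {
  if ("srcObject" in videoElement) {
    videoElement.srcObject = stream;
  } else {
    // Fallback for elements without srcObject, as in the original sample
    videoElement.src = stream;
  }
  return videoElement;
}

// Usage in the page; skipped when no DOM is available
if (typeof document !== "undefined" && typeof navigator !== "undefined") {
  var getMedia = findGetUserMedia(navigator);
  var videoElement = document.getElementById("video");
  if (getMedia && videoElement) {
    getMedia({ video: true }).then(function (stream) {
      attachStream(videoElement, stream).play();
    }, function (error) {
      alert(error);
    });
  }
}
```

Taking the navigator object as a parameter keeps the detection logic separate from the page, so it can be exercised with a stand-in object.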

We use a CSS3 animation to get a "slide" effect, so the picture slides from the video stream to the Photo Frame:

<style type="text/css">
  @keyframes slide {
    from { left: -440px; }
    to { left: 0px; }
  }

  .animated {
    position: relative;
    animation: slide 0.5s ease-in;
    -webkit-animation: slide 0.5s ease-in;
  }

  .normal {
  }
</style>

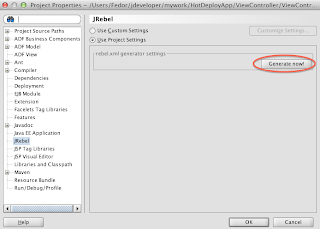

So, you can play with the result of our work here.

Since the ADF framework gets confused when pure HTML tags are mixed with ADF components, we access the HTML page containing the video and canvas tags via af:inlineFrame. An ADF page fragment should look like this:

<af:panelStretchLayout id="psl1">
  <f:facet name="bottom">
    <af:panelGroupLayout layout="horizontal" id="pgl1">
      <af:commandButton text="Submit" id="cb1" action="submit">
        <af:clientListener type="action" method="takePicture"/>
        <af:serverListener type="takePicture"
                           method="#{TakePictureBean.takePicture}"/>
      </af:commandButton>
      <af:commandButton text="Cancel" id="cb2" action="cancel"/>
    </af:panelGroupLayout>
  </f:facet>
  <f:facet name="center">
    <af:inlineFrame id="iframe"
                    source="Snapshot.html"
                    styleClass="AFStretchWidth"/>
  </f:facet>
</af:panelStretchLayout>

Let's pay attention to the Submit button. When we click this button, we grab the picture that was taken, send the image to the server, and set it as the value of a BLOB entity attribute. Let's look at the client side first. The code of the button's client listener:

function takePicture(actionEvent) {
  // We do all this just to get access
  // to the inlineFrame's content
  var source = actionEvent.getSource();
  var ADFiframe = source.findComponent("iframe");
  var iframe = document.getElementById(ADFiframe.getClientId()).firstChild;
  var innerDoc = (iframe.contentDocument) ?
                 iframe.contentDocument :
                 iframe.contentWindow.document;

  // Finally we can work with our canvas,
  // which contains the taken picture
  var canvas = innerDoc.getElementById("canvas");

  // Convert the image into a data URL string and
  // send it to the server with a custom event
  AdfCustomEvent.queue(source, "takePicture",
                       {picture : canvas.toDataURL("image/png")}, true);
}
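The `picture` value queued by the listener is a data URL of the form `data:image/png;base64,<payload>`, so whoever receives it has to skip everything up to and including `base64,` before decoding. As a minimal sketch of that step in JavaScript, the following splits such a string into its MIME type and raw bytes. The helper name `dataUrlToBytes` is hypothetical and not part of the sample application, and the code assumes a global `atob`, which browsers and recent Node.js versions provide.

```javascript
// Hypothetical helper: splits a base64 data URL into MIME type and bytes.
function dataUrlToBytes(dataUrl) {
  var marker = ";base64,";
  var markerIndex = dataUrl.indexOf(marker);
  if (dataUrl.indexOf("data:") !== 0 || markerIndex === -1) {
    throw new Error("Not a base64 data URL");
  }
  // Everything between "data:" and the first ";" is the MIME type
  var mimeType = dataUrl.substring("data:".length, dataUrl.indexOf(";"));
  // Everything after the marker is the base64 payload
  var binary = atob(dataUrl.substring(markerIndex + marker.length));
  var bytes = new Uint8Array(binary.length);
  for (var i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return { mimeType: mimeType, bytes: bytes };
}
```

For example, `dataUrlToBytes("data:image/png;base64,AQID")` yields the MIME type `image/png` and the three bytes 1, 2, 3.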

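On the server side we listen for the takePicture event. The code of the server listener:

// Imports this method relies on. The Base64 class is assumed here to be
// the Apache Commons Codec one; the original post does not say which it uses.
import java.sql.SQLException;
import oracle.adf.model.BindingContext;
import oracle.adf.model.binding.DCBindingContainer;
import oracle.adf.view.rich.render.ClientEvent;
import oracle.binding.AttributeBinding;
import oracle.jbo.domain.BlobDomain;
import org.apache.commons.codec.binary.Base64;

public void takePicture(ClientEvent clientEvent)
  throws SQLException
{
  // Convert the image into an array of bytes:
  // the payload starts right after the "base64," marker of the data URL
  String picture = (String) clientEvent.getParameters().get("picture");
  String prefix = "base64,";
  int startIndex = picture.indexOf(prefix) + prefix.length();
  byte[] imageData = Base64.decodeBase64(picture.substring(startIndex).getBytes());

  // Find an attribute binding pointing to a BLOB entity attribute
  BindingContext bc = BindingContext.getCurrent();
  DCBindingContainer dcb = (DCBindingContainer) bc.getCurrentBindingsEntry();
  AttributeBinding attr = (AttributeBinding) dcb.getControlBinding("Image");

  // And set the attribute's value
  attr.setInputValue(new BlobDomain(imageData));
}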

You can download a sample application for this post. It requires JDeveloper 11gR2. That's it!